
After years of waiting, we finally have a good solution for the dreaded screen tearing: adaptive sync technologies. Companies tried to solve this with V-Sync before, but it came with its own set of problems. After Nvidia's G-Sync technology came out, AMD wasted no time joining the competition and released FreeSync soon after. But how do these two compare? What are the differences, pros, and cons?

FreeSync vs G-Sync – Input Lag, Price, Performance Comparison

The Implementation

There are a few things to note about how each technology is implemented. NVIDIA uses proprietary hardware, a dedicated G-Sync module inside the monitor, to provide G-Sync. That means it only works with NVIDIA graphics cards and is disabled when the monitor is used with other sources.

AMD FreeSync works with conventional off-the-shelf components, which lets manufacturers easily adapt existing designs without increasing costs.

While this sounds great in theory, in practice it means that the quality of a FreeSync implementation depends on the manufacturer. In most cases, monitors only support FreeSync within a restricted refresh-rate range, which makes it less useful.

So, while G-Sync might be more costly, it provides a standardized experience across all monitors.

Connectivity & Input Lag Comparison

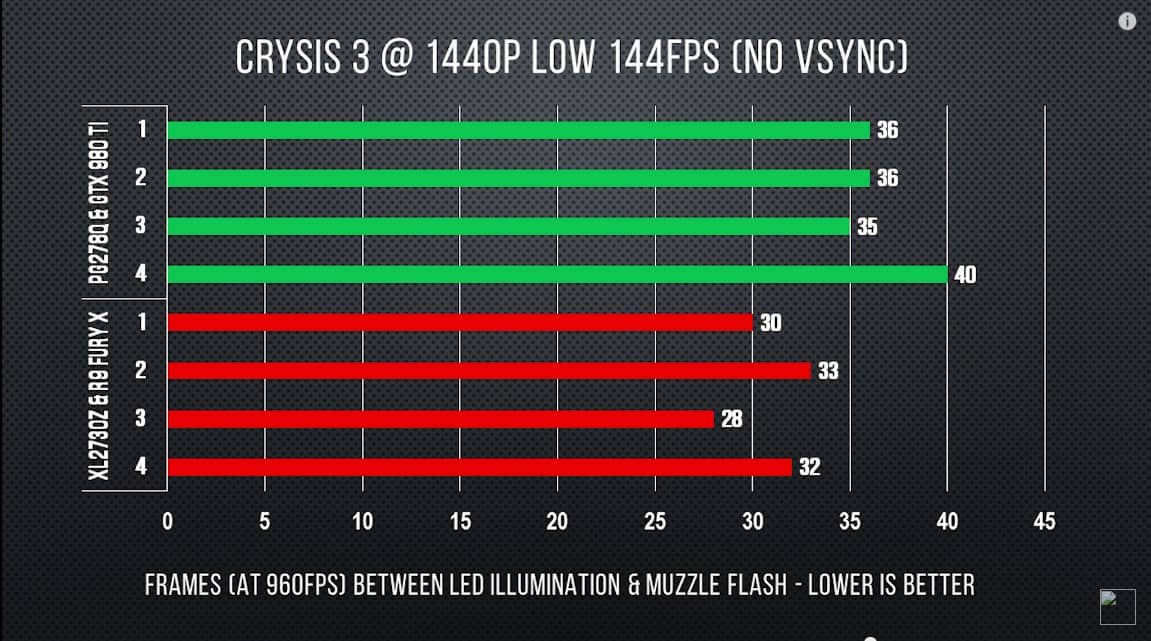

G-Sync screens all use the same NVIDIA hardware, designed from scratch with gaming in mind. As a result, all of them have low input lag. FreeSync displays, by contrast, are in the same position as non-adaptive displays: input lag varies from model to model.

This does not mean that FreeSync monitors inherently have higher input lag, but you will need to check reviews for input lag measurements before making your buying decision.

However, using non-proprietary hardware has its advantages. G-Sync modules only support one or two inputs. Even the newer generation, which offers HDMI as well as DisplayPort 1.2, is still restricted to HDMI 1.4, which runs out of bandwidth at higher resolutions or refresh rates and does not support G-Sync.
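To see why HDMI 1.4 becomes a bottleneck, here is a rough back-of-the-envelope check in Python. It is a simplified sketch: it counts only the visible pixels at 24 bits per pixel and ignores blanking intervals, so real link requirements are somewhat higher. It also assumes HDMI 1.4's usable data rate of about 8.16 Gbit/s (10.2 Gbit/s raw, minus 8b/10b encoding overhead). The names `pixel_data_gbps` and `HDMI_1_4_USABLE_GBPS` are just illustrative.

```python
# Assumed usable HDMI 1.4 data rate: 10.2 Gbit/s TMDS minus 8b/10b overhead.
HDMI_1_4_USABLE_GBPS = 8.16


def pixel_data_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Raw bandwidth of the visible pixels only, in Gbit/s.

    Blanking intervals are ignored, so the true link requirement is
    somewhat higher than this optimistic estimate."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    for w, h, hz in [(1920, 1080, 60), (1920, 1080, 144), (2560, 1440, 144)]:
        need = pixel_data_gbps(w, h, hz)
        verdict = "fits" if need <= HDMI_1_4_USABLE_GBPS else "too much"
        print(f"{w}x{h} @ {hz}Hz: {need:.2f} Gbit/s -> {verdict}")
```

Even with this optimistic estimate, 1440p at 144Hz needs roughly 12.7 Gbit/s, well beyond what HDMI 1.4 can carry.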

FreeSync displays, on the other hand, can have as many inputs as the manufacturer wants, including legacy ones like DVI or VGA. FreeSync itself only works over DisplayPort or HDMI, but unlike G-Sync, it does work over HDMI.

The takeaway here is that while G-Sync guarantees low input lag, FreeSync is more versatile, which is ideal if you want to use your display with various devices.

Supported Cards

G-Sync, given its proprietary nature, can only be used with NVIDIA graphics cards. FreeSync, however, is built on Adaptive-Sync, which VESA adopted as an open, optional part of the DisplayPort standard.

This means anybody, including AMD's competitors Intel and NVIDIA, can use the open Adaptive-Sync standard. To use FreeSync itself, you also need a compatible source: an AMD graphics card or APU, or a console like the Xbox One.

In early 2019, NVIDIA rolled out a GeForce driver update that added support for DisplayPort Adaptive-Sync. This means anybody with a GTX 10-series or RTX 20-series card can enable G-Sync on a FreeSync-compatible display connected over DisplayPort. NVIDIA maintains a list of monitors it has certified for this.

The winner here is once again FreeSync, since FreeSync monitors now work with graphics cards from both AMD and NVIDIA.

Extra Features

FreeSync's primary aim is simply to be a more accessible way to get variable refresh rate (VRR), while G-Sync aims to be an ecosystem for top-of-the-line gaming displays that offers the best gaming experience. This does not mean that a FreeSync display cannot match a G-Sync one, but every G-Sync monitor comes with some bonus features.

Perhaps the best known is Ultra Low Motion Blur (ULMB), NVIDIA's name for a black frame insertion technique that makes the backlight flicker. Its purpose is to significantly improve motion clarity. It cannot be used while G-Sync is on, but it is an excellent premium feature that is not found in many FreeSync displays.

Variable Overdrive is another feature you will find on every G-Sync display. Simply put, it adjusts the overdrive setting for each refresh rate to keep motion blur and ghosting to a minimum.

Most displays come with overdrive settings, but they are disabled whenever you turn on FreeSync, because they are only tuned for one specific refresh rate. To date, we have not come across a FreeSync screen that includes a variable overdrive feature.

Recently, NVIDIA and AMD announced new versions of their VRR technologies: G-Sync Ultimate and FreeSync 2, respectively. G-Sync Ultimate is essentially a certification process for top-tier HDR monitors.

FreeSync 2 includes an API that lets the graphics card take over some of the tone mapping that is normally handled by the monitor itself. According to AMD, this could help reduce input lag. However, since the processing involved is fairly simple, the real-world benefit may not be substantial.

When it comes to additional features, G-Sync is the clear winner.

Price & Availability

By now you have probably noticed that G-Sync offers a more polished and feature-rich experience than FreeSync, but it comes at a premium.

Most manufacturers that offer G-Sync monitors also make near-identical FreeSync variants of the same design. This is convenient, as it makes direct price comparisons easy and shows how much the G-Sync feature adds to the price.

When you compare such pairs of monitors, you get an average price difference of $190. This is to be expected: when the original G-Sync DIY upgrade kit launched, it sold for $200. NVIDIA's list of compatible graphics cards ranges from the Titan X and GTX 1080 Ti all the way down to the GTX 1050, which retails for as little as $150.

Another important thing to keep in mind is FreeSync's wide support, thanks to its low cost of implementation. It is not a stretch to predict that the average everyday monitor will support some form of Adaptive-Sync, while NVIDIA's G-Sync stays reserved for select high-end gaming displays.

Nowadays, you will also find FreeSync in a wide array of TVs, and variable refresh rate is built into the HDMI 2.1 standard, so it will become a fundamental part of new HDMI 2.1 displays.

The clear winner here is FreeSync. Thanks to its minimal added cost and open nature, FreeSync displays are easier to find and generally cheaper than their G-Sync counterparts.

How Adaptive Sync Technology Improves Performance

First, let's talk about what screen tearing is. While playing video games, your computer tries to render as many frames per second (FPS) as possible. But your monitor has a fixed refresh rate, 60Hz for example. This means your monitor refreshes the screen 60 times per second, or once every 16.7 milliseconds.

There is no problem when your computer delivers frames in step with the refresh rate, but when the two are out of step, the graphics card can send a new frame while the monitor is partway through drawing the current one. The top of the screen then shows one frame and the bottom shows the next, separated by a visible horizontal line. This is what we call "screen tearing".

It is probably the most annoying thing after sub-30 frame rates. V-Sync tried to combat this by making each new frame wait until the monitor finishes its current refresh before it is displayed, but this usually results in more input lag and stuttering.
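Here is a small Python sketch of the mismatch described above: a game running at 45 FPS on a 60Hz monitor. It is a simplified model, not how any driver works: it assumes the GPU keeps finishing frames at a steady pace whether or not V-Sync is on, and the function names are hypothetical. Exact fractions are used so that "exactly on a refresh tick" is not blurred by floating-point rounding.

```python
from fractions import Fraction


def frame_ready_times(fps, n):
    """Times (ms) at which a GPU rendering at a steady fps finishes n frames."""
    return [Fraction(1000, fps) * (i + 1) for i in range(n)]


def tears_without_sync(t, refresh_hz):
    """Without any sync, a finished frame is swapped in immediately.
    Unless that moment falls exactly on a refresh tick, the monitor is
    mid-refresh, so the screen shows two frames split by a tear."""
    return t % Fraction(1000, refresh_hz) != 0


def vsync_wait(t, refresh_hz):
    """With V-Sync, a finished frame is held until the next refresh tick.
    Returns that extra wait in ms, which shows up as added input lag."""
    period = Fraction(1000, refresh_hz)
    next_tick = -(-t // period)  # ceiling division: index of the next tick
    return next_tick * period - t


if __name__ == "__main__":
    frames = frame_ready_times(45, 5)  # a game running at 45 FPS...
    print([tears_without_sync(t, 60) for t in frames])  # ...on a 60Hz monitor
    print([round(float(vsync_wait(t, 60)), 2) for t in frames])
```

Without sync, four of the five frames arrive mid-refresh and tear. With V-Sync, the waits alternate between about 11.1 ms, 5.6 ms and 0 ms, and frames reach the screen on ticks 2, 3, 4, 6 and 7, so one frame stays up for two refreshes while the others get one. That uneven pacing is the stutter.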

Adaptive sync solves this with a very simple idea: instead of refreshing at a fixed rate, your monitor matches its refresh rate to your current FPS. If you are running the game at 45 FPS, your monitor refreshes at 45Hz. Simple, yet very effective. Once you play a game with it, you cannot go back.
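The idea fits in a few lines of Python. This is a simplified model: `adaptive_refresh` and the 30 to 144Hz range are hypothetical, and any frame rate outside the monitor's supported range is treated as unsupported.

```python
def adaptive_refresh(fps, min_hz, max_hz):
    """Return (refresh_hz, refresh_interval_ms) for an adaptive-sync monitor,
    or None when fps falls outside the monitor's variable refresh range.

    Inside the range the monitor starts a new refresh as soon as each frame
    arrives, so the refresh rate simply equals fps and no frame waits for a
    fixed tick the way it does with V-Sync."""
    if not min_hz <= fps <= max_hz:
        return None
    return fps, 1000 / fps


if __name__ == "__main__":
    # Hypothetical monitor with a 30-144Hz variable refresh range.
    print(adaptive_refresh(45, 30, 144))  # follows the game: 45Hz
    print(adaptive_refresh(20, 30, 144))  # below the range: None
```

At 45 FPS each refresh lasts about 22.2 ms, exactly as long as each frame takes to arrive, so there is nothing to tear and nothing to wait for.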

AMD FreeSync: Pros and Cons

AMD uses the Adaptive-Sync open standard in DisplayPort 1.2a and later versions. Since it does not require a proprietary module like Nvidia’s G-Sync, it is much more affordable.

AMD also does not charge manufacturers licensing fees, so FreeSync is found in a much broader range of monitors, which also tend to be cheaper. This is FreeSync's strongest point, and for this reason alone many gamers on a budget go for AMD. Our options became even broader once AMD implemented FreeSync over HDMI.

FreeSync monitors also have many more connectivity options and tend to offer a full selection of ports. G-Sync displays are usually limited to DisplayPort and HDMI, since the G-Sync module restricts the inputs a G-Sync monitor can support.

This comes with a cost, though. FreeSync monitors usually have a narrower variable refresh range. If your frame rate drops below the lower limit, FreeSync stops working and you will start seeing screen tearing again.

To combat this, AMD introduced Low Framerate Compensation (LFC). When the frame rate falls below the FreeSync lower limit, LFC shows each frame multiple times so that the refresh rate lands back inside the FreeSync range. However, not every FreeSync monitor supports LFC, so it is one more feature to look for when shopping.
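The frame-repeating trick can be sketched in Python. This is an illustration under stated assumptions, not AMD's actual driver logic: `lfc_refresh` is hypothetical, it simply picks the smallest whole number of repeats that brings the refresh rate back into range, and the `min_ratio=2.0` default (LFC needing a maximum refresh rate of at least about twice the minimum) is an assumption rather than an AMD specification.

```python
import math


def lfc_refresh(fps, min_hz, max_hz, min_ratio=2.0):
    """Sketch of Low Framerate Compensation (LFC).

    Returns (repeats, refresh_hz): how many times each frame is shown and
    the resulting refresh rate, or None if adaptive sync cannot cover fps.
    min_ratio is an ASSUMED requirement that max_hz be at least this many
    times min_hz for LFC to be available."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps > max_hz:
        return None  # above the range: the GPU outruns the monitor
    if fps >= min_hz:
        return 1, fps  # inside the range: one refresh per frame
    if max_hz < min_ratio * min_hz:
        return None  # range too narrow for LFC: tearing returns
    # Smallest whole number of repeats that lifts the refresh rate
    # back to (or above) the lower limit of the range.
    repeats = math.ceil(min_hz / fps)
    refresh = repeats * fps
    if refresh > max_hz:
        return None
    return repeats, refresh


if __name__ == "__main__":
    # Hypothetical 48-144Hz and 48-75Hz monitors.
    print(lfc_refresh(30, 48, 144))  # (2, 60): each frame shown twice
    print(lfc_refresh(30, 48, 75))   # None: range too narrow for LFC
```

The second case shows why a wide refresh range matters: with a narrow range, there may be no multiple of the frame rate that fits, and the monitor falls back to tearing.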

To see whether your AMD GPU supports FreeSync, check AMD's FreeSync page for the full list of supported graphics cards.

Nvidia G-Sync: Pros and Cons

Nvidia takes a much stricter approach to adaptive sync. It works closely with display manufacturers during development, and their monitors must pass a certification process. Nvidia also charges monitor makers for the chip that handles G-Sync, which is reflected in prices. On top of that, the narrower selection of inputs can turn off some users.

Nvidia makes up for these cons, though. Every G-Sync monitor supports the equivalent of AMD's Low Framerate Compensation, so you do not have to look for it separately. All G-Sync monitors also have "frequency dependent variable overdrive", which reduces ghosting. Ghosting is another significant problem for gamers, especially on monitors with slower response times.

Plus, some of the monitors that have G-Sync include Ultra Low Motion Blur, which effectively combats motion blur at very high refresh rates. It is really useful in competitive gaming where extremely high refresh rates are used. You can’t use G-Sync and ULMB concurrently, though.

Conclusion

While there are some important differences, G-Sync and FreeSync work in much the same way, and the choice is up to you. If you already own a strong graphics card, you will probably go with that company's adaptive sync technology anyway: G-Sync monitors only work with NVIDIA cards, and while NVIDIA cards can now use many FreeSync monitors, AMD cards cannot use G-Sync. AMD owners need a FreeSync monitor, while Nvidia owners get the most out of a G-Sync monitor but can also use a compatible FreeSync one.

Apart from price, though, the choice really does not matter that much. G-Sync monitors usually make up for their higher price with generally better performance, but if you do enough research and find a FreeSync monitor with LFC and a wide refresh-rate range, you can get the same experience as with G-Sync.

If you are a competitive gamer who plays at extreme refresh rates like 240Hz, Nvidia's ULMB may convince you to go for G-Sync. Whichever you choose, once you have used a gaming monitor with either of these technologies, you will be amazed and won't want to go back!

Charles has been writing about games for years and playing them all his life. He loves FPS and adventure games, as well as titles like Dota 2, CSGO and more.